Predictive Analytics: Finding the Future in Big Data

As the old English proverb says, “necessity is the mother of invention.” When it comes to modern business intelligence goals, these words of wisdom ring truer than ever.

The availability of big data to business users already facile with reporting tools now necessitates analytic solutions that define trends and predict future events. Accurate models of consumer behavior, for example, can lead to better product development and marketing decisions that help companies compete and win. Predictive analytics (PA) is thus the key to unlocking more, and better, decisions from data.

“Predictive analytics is the practice of extracting information from existing data sets in order to determine patterns and predict future outcomes and trends. Predictive models and analysis are typically used to forecast future probabilities with an acceptable level of reliability. Applied to business, predictive models are used to analyze current data and historical facts in order to better understand customers, products, and partners, and to identify potential risks and opportunities for a company.” – Webopedia

Closely related to traditional business intelligence (BI) and analytics, PA is a growing trend that benefits from technological innovation and is seeing a rapid increase in use cases. The insurance and financial industries, for example, rely heavily on forecasts, and many companies in them are now making investments in predictive analytics a top priority. Using PA to properly assess risks based on actuarial data and proven hypotheses can mean the difference between new product ROIs and catastrophic liability. Weather models forecasting everything from hurricanes to sea-ice melt allow scientists to measure the effects of climate change and illustrate future scenarios. Crime prevention, genomics, human and knowledge performance indicators, natural resource exploration, project management, and other disciplines have stakes in PA.

Driving Growth

There are many ways PA can help your business grow, too. PA can provide valuable insight into consumer buying habits and patterns, empowering a company to forecast if and when prospective customers need incentives, as well as the proper timing of promotions or relevant ads that will resonate with return customers. For example, a tablet computer manufacturer needs to understand all of the reasons why its products are purchased. Durability statistics and competitive pricing data can be used to predict the right time to offer coupons for replacement models.
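As a rough illustration of the coupon-timing idea, the sketch below estimates when a customer is likely to need a replacement and schedules an offer shortly before that date. It is a minimal sketch under stated assumptions: the function name `coupon_date`, the `lead_days` parameter, and the lifespan figures are all hypothetical, and it uses only durability data (a real model would also weigh competitive pricing).

```python
from datetime import date, timedelta
from statistics import median


def coupon_date(purchase_date, observed_lifespans_days, lead_days=60):
    """Suggest a date to send a replacement coupon.

    The expected product life is taken as the median of previously observed
    lifespans (in days); the coupon goes out `lead_days` before that point.
    """
    if not observed_lifespans_days:
        raise ValueError("at least one observed lifespan is required")
    expected_life = round(median(observed_lifespans_days))
    return purchase_date + timedelta(days=expected_life - lead_days)


# Hypothetical durability statistics: days until earlier tablets were replaced.
lifespans = [700, 730, 760]
send_on = coupon_date(date(2024, 1, 1), lifespans, lead_days=60)
print(send_on)
```

The median is used here instead of the mean so that a few unusually short or long product lifetimes do not skew the schedule.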

As online shopping has become prevalent, retailers must collect and store the massive amounts of data being generated, and make sense of it to gain meaningful insight and set actionable objectives.

Going Mobile

Mobile devices only compound the amount of data companies must deal with, and force them to pursue multi-platform strategies for inbound data collection and outbound promotional campaigns simultaneously.

In e-commerce and web marketing, predictive models can be used with geolocation technology to gauge customer experiences and expectations. Social network purveyors are already applying analytics to location data to predict future events and forecast popular trends. Software and hardware vendors are integrating location-based analytics with their business intelligence suites to address this need.

“Decision making and the techniques and technologies to support and automate it will be the next competitive battleground for organizations. Those who are using business rules, data mining, analytics and optimization today are the shock troops of this next wave of business innovation.” – Tom Davenport, Competing on Analytics

Big Data

To process various sources of “big data” for PA, some companies have begun to leverage the open source Hadoop paradigm for distributed computing:

“The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly-available service on top of a cluster of computers, each of which may be prone to failures.” – Apache

Google invented some of the technology underlying Hadoop to index collected information, examine user behavior, and improve the performance of its search and display algorithms. Yahoo has also made use of Hadoop on a wide scale.
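The "simple programming models" in the Apache description usually mean splitting a job into a map step and a reduce step. The sketch below imitates that model in plain Python on a single machine to show the shape of such a job. It does not use any Hadoop API; the function names and the sample log lines are hypothetical.

```python
from collections import defaultdict


def map_phase(records, mapper):
    """Apply the mapper to every record, yielding (key, value) pairs."""
    for record in records:
        yield from mapper(record)


def shuffle(pairs):
    """Group values by key, as a framework would do between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, reducer):
    """Collapse each key's list of values into a single result."""
    return {key: reducer(key, values) for key, values in groups.items()}


def page_view_mapper(line):
    # Each hypothetical log line is "user,page"; emit one count per page view.
    _user, page = line.split(",")
    yield page, 1


def count_reducer(_key, values):
    return sum(values)


log_lines = ["u1,/home", "u2,/cart", "u1,/cart", "u3,/home", "u2,/home"]
counts = reduce_phase(shuffle(map_phase(log_lines, page_view_mapper)), count_reducer)
print(counts)
```

In a distributed framework, the map and reduce steps would run in parallel on many machines, each working on its own slice of the data, while the framework handles the grouping and any machine failures.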

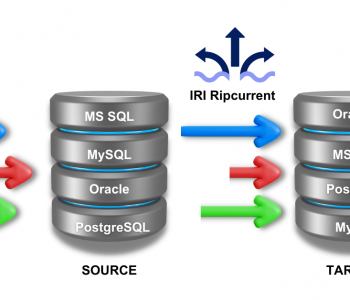

Although Hadoop can process large amounts of data in different structures, its users must be fairly advanced and learn several different technologies. As with early databases that required custom code to process data, very few “out-of-the-box” applications run seamlessly or easily with Hadoop. For this reason, IRI’s big data preparation (packaging, protection, and provisioning) platform, CoSort, does not require Hadoop, nor does it carry Hadoop’s learning curve or maintenance costs.

PA Tools & Preparation Alternatives

A compelling open source solution for modeling and analyzing prepared data is R, a language for statistical computing that is developed by the R Project and that Revolution Analytics has also distributed commercially. Like SAS and SPSS, R uses statistical analysis to model and mine data. According to the community website inside-R [edit: now defunct], “R is the leading language and environment for statistical computing and graphics.” The R Project involves “an international ecosystem of academics, statisticians, data miners, and others committed to the advancement of statistical computing.”

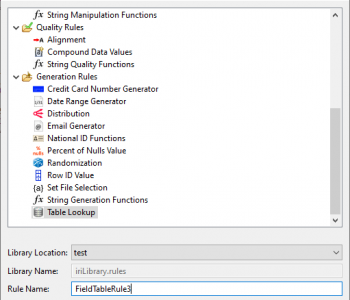

There are actually many PA tools available — some more capable, scalable, and affordable than others. Advanced reporting solutions from IRI are worthy of consideration because analytics can be combined in the same product, place and I/O pass with data transformation, conversion, and protection.

The SortCL program in the IRI CoSort product or IRI Voracity platform, for example, lets users quickly sort, join, and aggregate values from multiple table and file sources simultaneously, so DBAs can virtualize or materialize the variables they want to relate. SortCL can also filter, cleanse, enrich, mask, and reformat disparate data into moving aggregate and statistical reports that display trends from production (while de-identifying PII).
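To make the idea of a moving aggregate concrete, here is a minimal sketch of a moving average in plain Python. It is not SortCL syntax and does not reflect how SortCL is implemented; the function name and sample figures are hypothetical.

```python
from collections import deque


def moving_average(values, window):
    """Return the average of the last `window` values at each position.

    Early positions, where fewer than `window` values exist, are averaged
    over the values seen so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # holds only the values inside the window
    running_total = 0.0
    averages = []
    for value in values:
        if len(recent) == window:
            running_total -= recent[0]  # this value is about to leave the window
        recent.append(value)
        running_total += value
        averages.append(running_total / len(recent))
    return averages


# Hypothetical daily order counts smoothed over a 2-day window.
print(moving_average([10, 20, 30, 40], window=2))
```

Smoothing a series this way suppresses day-to-day noise, which makes an underlying trend easier to see in a report or chart.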

CoSort/SortCL “data franchising” or preparation activities have made advanced BI and PA display tools perform better since 2003, and without the need for Hadoop. SortCL’s fast mash-up and data blending activities hand digestible CSV, XML and table results off to those tools, removing what would otherwise be big data transformation and synchronization burdens for them or their users. For those with Hadoop, many of the same jobs can also run seamlessly in MapReduce 2, Spark, Spark Stream, Storm or Tez through Voracity’s VGrid gateway.

For more real-time preparation-to-dashboard results, SortCL integrates with BIRT in the IRI Workbench GUI, built on Eclipse. Consider this PA example with linear regression that leverages SortCL and BIRT. Additional visualization options through IRI Workbench jobs include a Splunk add-on, and a tie-up to the DW Digest cloud dashboard. R is also ready for Eclipse via Walware’s free “StatET” plug-in, and is thus easy to use in that same GUI for correlation analysis on CoSort/Voracity-prepared data, too.
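For readers unfamiliar with linear regression, the sketch below fits a least-squares line to a short series and extends it one step ahead. It is a generic illustration in plain Python, not the SortCL and BIRT example mentioned above; the function names and sales figures are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must contain at least two distinct values")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def predict(model, x):
    """Evaluate the fitted line at x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical monthly unit sales (illustrative numbers only).
months = [1, 2, 3, 4, 5, 6]
units = [112, 118, 131, 139, 152, 158]

model = fit_line(months, units)
forecast = predict(model, 7)  # projected sales for month 7
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, forecast={forecast:.1f}")
```

A straight-line fit like this only captures a steady trend; seasonal or nonlinear patterns would call for a richer model.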

Regardless of the tool used to facilitate predictive analytics, one fact remains: PA affects all of us, every day. It may be largely unseen, but it’s a determining factor in millions of decisions. PA is harnessing the power of big data, and taking businesses and other organizations one step closer to becoming the fortune tellers they yearn to be.
