Test Data Management: Test Data Needs Assessment (Step 2…

This article is part of a 4-step series introduced here. Navigation between articles is below.

Step 2: Test Data Needs Assessment

Once the questions of who needs test data for what — and who will be dealing with it along its lifecycle are answered (see Step 1) — a deeper dive is needed into the specific technical aspects of the data itself.

Here are some of the topics to consider and questions to answer:

- Purpose – What specific test sets are needed, and for what purpose? For example, how many test tables are needed, and for which database? What kind of files (and in what format) are required to thoroughly test the application or platform?

- Properties – What are the essential attributes of those sets; i.e., what layouts, data types, and value ranges must be in them? Can you build new composite or master data values and formats on the fly; for example, in defining a custom date, phone or ID number layout using specific component strings and values?

- Sources – Where should that data come from? What internal file and table sources are available, and what else is needed? Should the test data come from production and/or third-party sources, or is random generation of new values (or selection of existing ones) acceptable? You should be able to make the decision at the column level for every target, and apply a common source to multiple targets with similarly-named columns.

- Security – What internal regulations or governmental data privacy laws apply to the source data? If production data is used, how will you mask it while maintaining realistic appearances and relationships to other elements (e.g., primary and foreign keys that must join)? Would a random pull of column values be compliant if other elements were de-identified?

- Volumes – How much data is needed for each target set (e.g., how many rows per table, fact vs. dimension) in order to reflect reality or stress the application? Are you accounting for future growth and, if so, at what rate? Will you have an easy way to re-build a larger set?

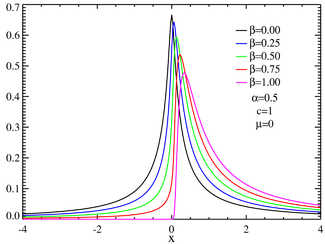

- Distributions – Should values occur at random, or in specific frequency occurrence patterns? What kind of distribution is expected?

- DB Attributes – Is referential integrity required? Are there certain types of values or ranges needed to satisfy triggers or special constraints? What about bad or null data values, or bad dependencies?

- Conformance – Are there compatibility issues to consider in the data itself, like big or little endian characteristics? Must it adhere to a standard (e.g., a valid XML file structure, or DB-specific date format)?

- Destination – Upon generation, where do the test sets belong for optimal use? For example, should output go to a holding area for later deployment, directly to test tables, into a report, or more than one place? If the data is huge, it could save time to build it on the same (DB) server.

- Transformation – Is it desirable that manipulation of the test data, like sorting, cross-calculation, aggregation, or reporting, happen in conjunction with its generation? Can you apply specific generation, selection, or formatting rules to like fields across different target tables or files?

Once these kinds of questions are considered, decisions can be made about the test data generation method(s) necessary to produce what’s needed.

See Sharon’s article for some of the considerations around test data realism. Check out the capabilities of IRI FieldShield for masking production data, or IRI RowGen for generating safe, intelligent and referentially correct data from production metadata that supports the testing qualities above.

Click here for the next article, Step 3: Test Data Generation & Provisioning, or here for the previous step.