Masking PANs in Credit Card Images

Abstract: Previously, the DarkShield API for Files could perform black-box redaction of primary account numbers (PANs, also known as CCNs) on credit cards only within defined areas of the image. The accuracy of credit card number detection, and thus masking, has now been enhanced thanks to new OCR-A support in DarkShield. This addresses use cases where PAN locations vary among images, and where masked or synthetic PANs are needed in testing.

DarkShield and Optical Character Recognition

IRI DarkShield is a solution built to provide data protection in semi-structured and unstructured data files. By searching for and identifying sensitive information in these types of files, DarkShield is able to provide precise masking control over files using various masking operations.

It is important to note that unstructured data does not have to mean text in text files. Text in images, and text in images embedded in documents, are also viable targets for DarkShield. To scan and detect sensitive data in images, the DarkShield API uses a technology called optical character recognition (OCR).

How OCR Works

OCR works by processing a scanned image and analyzing where its black and white pixels fall in order to identify characters. Normally, a preprocessing step called thresholding first converts the image to pure black and white.
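As a minimal sketch of thresholding, the snippet below converts a small grayscale grid into a binary one. The fixed cutoff of 128 and the function name `threshold` are assumptions made for illustration; real OCR engines typically choose the cutoff adaptively rather than hard-coding it.

```python
def threshold(gray, cutoff=128):
    """Return a binary image: 1 for dark (ink) pixels, 0 for light background.

    `gray` is a list of rows of 0-255 intensities. The fixed cutoff is an
    illustrative assumption, not how any particular OCR engine picks it.
    """
    return [[1 if px < cutoff else 0 for px in row] for row in gray]


# A tiny 3x4 grayscale patch with a dark vertical stroke on a light background.
gray = [
    [250, 240, 30, 245],
    [248, 20, 25, 251],
    [252, 247, 35, 249],
]

binary = threshold(gray)
for row in binary:
    print(row)
```

After this step every pixel is either ink or background, which makes the later segmentation and recognition steps much simpler.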

Each character is then segmented into its own individual image. During the recognition phase, individual characters and words are identified based on a score assigned to each candidate.

OCR and Credit Card Font

Normally OCR relies on machine learning to recognize characters from groups of contours and shapes detected in an image. Machine learning is a powerful tool that provides an accurate and flexible method for character recognition.

That said, machine learning is also limited by the trained models it uses. A broad but easy-to-understand example would be that a model trained on detecting cars cannot be used to detect a motorcycle.

Similarly, the Tesseract OCR models trained for detecting characters in images have challenges recognizing the characters of a credit card because they may be in a special font. This special credit card font is referred to as OCR-A font. To deal with special fonts, models need to be trained with large data sets to learn how to recognize characters in special fonts.

A common alternative for recognizing characters in an exotic font is template matching. Template matching can be useful in certain situations, like in recognizing PANs, and is available as an alternative in the DarkShield API for detecting these characters in credit cards.

What is Template Matching?

Template matching is a technique used to find matches or close matches in an image using a template image as the reference. In the context of OCR, template matching is used to help recognize optically processed characters in images.

This technique can be a very simple but effective method used for matching on handwriting or characters of a particular font.

Template matching requires a template image containing characters in the target font. Using the template image as the base comparator, OCR will process the actual image to be parsed by using a sliding technique.

Reference image for OCR-A font used in template matching.

This process slides the template image across the target image from left to right and top to bottom, one pixel at a time, and calculates how well the template matches at each location. The template character with the best match score is recorded as the recognized character.

Sliding across an image and matching based on the template image
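The sliding comparison can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not DarkShield's implementation: the score used here is the sum of squared differences (lower is better), the 3x3 "1" and "7" templates are toy stand-ins for real OCR-A glyphs, and the names `match_score`, `best_match`, and `recognize` are hypothetical.

```python
def match_score(image, template, top, left):
    """Sum of squared differences between the template and one image window."""
    return sum(
        (image[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def best_match(image, template):
    """Slide the template over the image one pixel at a time.

    Returns (score, top, left) for the position with the lowest score.
    """
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = None
    for top in range(ih - th + 1):          # top to bottom
        for left in range(iw - tw + 1):     # left to right
            score = match_score(image, template, top, left)
            if best is None or score < best[0]:
                best = (score, top, left)
    return best


def recognize(glyph, templates):
    """Return the label of the template that matches the glyph best."""
    return min(templates, key=lambda label: best_match(glyph, templates[label])[0])


# Toy binary templates (1 = ink), standing in for reference glyphs.
templates = {
    "1": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
    "7": [[1, 1, 1], [0, 0, 1], [0, 0, 1]],
}

# A segmented character image that contains a "7" shifted down and right.
glyph = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
]

print(recognize(glyph, templates))
```

Because every candidate template is compared against the same character image, the label with the best score wins. This is why the approach works well for a fixed font with a small character set such as the digits in a PAN.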

To learn more about OCR template matching, follow this link.

Configuring the DarkShield-Files API Call

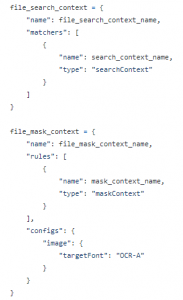

To begin with, calls must be made to the DarkShield-Files API to create a search context, mask context, file search context, and file mask context. These contexts tell the API how it will search for PII, what masking operations should be applied on found PII, and special configurations to be used based on file types.

Search and mask context

In the search context shown above, a credit card matcher named “CcnMatcher” is defined and uses a regular expression pattern matcher to identify PANs. A mask context defines rules to indicate the masking function that will be used, and rule matchers to match rules with search matchers.
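To illustrate what a regular expression matcher for PANs does with recognized text, here is a small sketch. The pattern is a hypothetical example that covers only 16-digit numbers written in four groups; it is not necessarily the pattern used by “CcnMatcher”, and the sample text and the `find_pans` helper are made up for illustration.

```python
import re

# Hypothetical pattern: 16 digits in four groups of four, with an optional
# space or hyphen between groups. Other PAN lengths are not covered.
CCN_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def find_pans(text):
    """Return (start, end, match) for every PAN-like string in the text."""
    return [(m.start(), m.end(), m.group()) for m in CCN_PATTERN.finditer(text)]


# Text as it might come back from OCR on a card image (test number, not real).
ocr_text = "VALID THRU 12/27 4111 1111 1111 1111 J. DOE"

for start, end, pan in find_pans(ocr_text):
    print(start, end, pan)
```

The start and end offsets matter as much as the match itself, since a masking step needs to know where the matched text sits in order to replace or redact it.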

In the setup above, a format-preserving encryption (FPE) rule called “FpeRule” is created, along with a rule matcher called “FpeRuleMatcher” that pairs the “CcnMatcher” with the “FpeRule”.
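The relationship between the two contexts can be sketched as a pair of request payloads. Only the names “CcnMatcher”, “FpeRule”, and “FpeRuleMatcher” come from the article; the context names, the field names, the `type` values, the regular expression, and the FPE expression string are all assumptions made for illustration, so check them against the DarkShield API documentation and the demo source before use.

```python
import json

# Assumed payload shape for a search context with one regex matcher.
search_context = {
    "name": "SearchContext",                     # hypothetical context name
    "matchers": [
        {
            "name": "CcnMatcher",
            "type": "pattern",                   # assumed type for regex matchers
            "pattern": r"\b(?:\d{4}[ -]?){3}\d{4}\b",  # hypothetical pattern
        }
    ],
}

# Assumed payload shape for a mask context: one rule, one rule matcher.
mask_context = {
    "name": "MaskContext",                       # hypothetical context name
    "rules": [
        {
            "name": "FpeRule",
            "type": "cosort",                    # assumed rule type
            "expression": "enc_fp_aes256_alphanum(${CCN})",  # assumed FPE expression
        }
    ],
    "ruleMatchers": [
        {
            "name": "FpeRuleMatcher",
            "type": "name",                      # assumed: match by matcher name
            "rule": "FpeRule",
            "pattern": "CcnMatcher",
        }
    ],
}

print(json.dumps(mask_context, indent=2))
```

The key point is the linkage by name: the rule matcher refers to the search matcher on one side and to the masking rule on the other, so the same PAN matcher could be paired with a different rule without touching the search context.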

File search context and file mask context

File search contexts and file mask contexts allow file-type-specific configurations to be passed as part of the context. In the setup above, there is a configuration for image files that specifies that template matching will be used in conjunction with OCR.

Results of Search and Masking

Original credit card image

Credit card image with redacted numbers

Comparing the before and after images, we can see that the PANs have been identified and masked using black-box redaction. To view the full source code, see the credit card demo hosted in IRI’s GitHub repository. Contact darkshield@iri.com if you would like more information.

- Note that the DarkShield-Files API can also mask account and routing numbers on checks when the API call supplies the coordinates where these numbers are located (they are always in the same place). This can be seen in the following article, where the DarkShield-Files API masks credit card numbers and checking account and routing numbers by replacing the sensitive data in images with newly generated, realistic data. Alternatively, the data can simply be redacted with a black box.