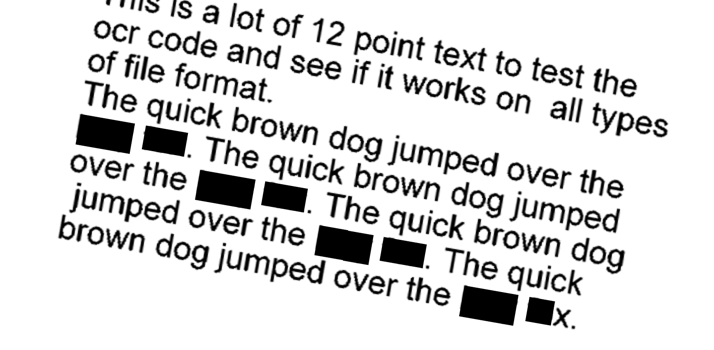

Preprocessing Images to Improve OCR & DarkShield Results

Optical Character Recognition (OCR) software is technology that recognizes text within a digital image. OCR is used by IRI DarkShield software to recognize text in standalone or embedded images during PII searching and masking operations.

OCR does have its limits however; for accurate results, it requires the image to be vertically aligned, sized properly, and as clear as possible. Not every image meets those requirements!

We must therefore find and use methods to adjust these images to meet our needs through preprocessing. This article discusses a few preprocessing techniques, and how they can improve the quality of OCR output in a DarkShield data masking context.

Pre-Process Method 1: Image Scaling

Image scaling is the easiest method to understand and consists of enlarging or shrinking an image to the desired size.

(fig. 1) Original image of a medical scan.

(fig. 1) Original image of a medical scan.

Figure 1 above shows an X-ray image that is 800×800 pixels and Figure 2 below shows the Tesseract OCR output of the image. Tesseract missed the age and the date of birth:

(fig. 2) Tesseract OCR text detected from original image.

(fig. 2) Tesseract OCR text detected from original image.

This can be resolved by increasing the size of the image, which in turn will enlarge the text enough for Tesseract to recognize it.

(fig. 3) Code for scaling an image by a factor of 2 and saving the new image.

(fig. 3) Code for scaling an image by a factor of 2 and saving the new image.

By importing the Pillow library, we can increase the size of the image while also maintaining the same aspect ratio. Maintaining this ratio is important because if we were to set the image to a specific size of X by Y pixels, we could potentially warp the image and do more harm than good.

The code from figure 3 doubles the size of our X-ray image from 800×800 pixels to 1600×1600 pixels. Feeding the resized image into Tesseract results in more data being captured as seen in figure 4:

(fig 4) Date of birth was able to be detected correctly by the Tesseract OCR engine after scaling the (800×800) image by a factor of 2x.

(fig 4) Date of birth was able to be detected correctly by the Tesseract OCR engine after scaling the (800×800) image by a factor of 2x.

It is important to note however that increasing the image size too much has diminishing returns, as seen in figure 5 where the X-ray image was increased by a multiple of 3x.

(fig 5) Increasing the scale to 3x for a total resolution of (2400×2400) pixels actually decreased the OCR accuracy.

(fig 5) Increasing the scale to 3x for a total resolution of (2400×2400) pixels actually decreased the OCR accuracy.

The amount by which an image must be increased or decreased is highly dependent on the size of the text displayed in the image.

Pre-Process Method 2: Binarization

Binarization is the method of converting a color image into an image that consists of only black and white pixels; i.e., where the black pixel value = 0 and the white pixel value = 255.

This can be done by applying what is known as an Otsu threshold (named after Nobuyuki Otsu) where normally that threshold=127, half the pixel range between 0 and 255). If the pixel value is greater than the threshold, it is considered a white pixel, else a black pixel.

The Tesseract OCR engine applies Otsu binarization internally when processing images. Because of this, pre-processing with Otsu binarization is unnecessary. Nevertheless, binarization may not even produce desirable results, as seen here:

(fig 14)

(fig 14)

For cases like this where the page background is of uneven darkness, using a different binarization algorithm such as adaptive thresholding may improve OCR results. In adaptive binarization, the threshold is not the same throughout the image; the threshold for a pixel in this method is based on a small region around instead of a global threshold for the image.

Binarization can also be used in other ways, including preparing an image to be deskewed. This is because binarization can help the computer recognize text so that the angle of that text can be detected more easily.

Pre-Process Method 3: Deskewing

Running physical documents through a scanner can result in a digital image that is slightly tilted, or skewed. To get the most accurate OCR output, we want to square up, or vertically align the input image as much as possible.

To address the problem, we must calculate the angle by which the text is skewed, then counter- rotate that image. To do this, first we apply gray-scaling, blurring, and binarization to the image.

(fig 6,7)

(fig 6,7)

The original image above has a white background and black text, but after being prepped the image background is inverted and slightly blurred (temporarily to calculate where the text is).

Next, to find the location of the text we dilate the white pixels to merge the text into blocks so we can find the largest block and apply a bounding box around it.

(fig 8) Otsu binarization applied to help detect where the text is located.

(fig 8) Otsu binarization applied to help detect where the text is located.

(fig 9)

(fig 9)

There are many methods to calculate the skew angle of the text, but the easiest is to take the largest text block and use its box angle. The final step is to just determine the angle of the box and then correct for it as seen in the following figures:

(fig10) Determine the angle of the skew.

(fig10) Determine the angle of the skew.

(fig 11) Rotate the image by the skew angle that was detected

(fig 11) Rotate the image by the skew angle that was detected

(fig 12)

(fig 12)

As you can see in Figure 12, the image is now de-skewed and we can now feed it through Tesseract to perform OCR:

(fig 13) Tesseract OCR accuracy was improved after correcting the skew in the image.

(fig 13) Tesseract OCR accuracy was improved after correcting the skew in the image.

Figure 13 shows that the skewed image did not produce any text in Tesseract, but the “fixed” version of the image captured it all perfectly. It should be noted that given this image, Tesseract can detect text with 100% accuracy within an angle of +/- 2 degrees.

Automation of this process is possible if the angle is tested on each image. If there is a difference after dividing the angle of the text in the image by 90 degrees, then the image is skewed. If the skew angle is greater than or equal to a specified threshold for triggering deskewing, then deskewing is performed.

If you would like to learn more about how the deskewing process works, click here to find the original source code and the breakdown.

Using Image Preprocessing Pipelines with the DarkShield API

This demo available in GitHub demonstrates how to integrate the preprocessing methods discussed in this article with the DarkShield API for (image) files.

The demo program allows either a single image or a folder of images to be specified to preprocess. Each image is first preprocessed, sent to the DarkShield-Files API for searching and masking, then post-processed to restore it to the original image.

In such cases, the image still retains any black boxes that were placed by the DarkShield-Files API to mask sensitive data. Note that if applying adaptive binarization among the preprocessing methods, it is not possible to restore the image to its original coloring.

The images are saved in a directory named masked that will be automatically created if it does not exist when running the demo. Additional arguments may be specified to the program that determine if a pipeline should be used.

The default is to only rescale images if they are smaller than 1600 pixels in width, and only deskew images if the skew angle is greater than 5 degrees. These specific values can be modified by specifying command line arguments to the program main.py. The width in pixels can be specified with a -w flag, and the angle in degrees can be specified with a -a flag.

The -b flag specifies whether adaptive thresholding should be used among the pipelines. Even if -b is specified as true, a calculation will still be performed to determine if the image has uneven brightness.

If the image does not have uneven brightness, this preprocessing method will not be applied as it would have no benefit to OCR accuracy. The -t argument specifies an integer between 0 and 100 that should be used for adaptive thresholding (the default value is 25).

The demo includes a folder with a few example pictures. One picture is skewed, one has uneven lighting, and one is in need of resizing to improve the text that is recognized by OCR.

The results of running the three images in the folder through the demo program are shown below:

Final result of a skewed image that was preprocessed, sent to the DarkShield API, and post-processed.

Final result of a skewed image that was preprocessed, sent to the DarkShield API, and post-processed.

Final result of an image in need of scaling that was sent through a pipeline program that pre-processes, sends to the DarkShield-Files API, then post processes. The preprocessing helped DarkShield correctly find (match, and mask) the date of birth.

Final result of an image in need of scaling that was sent through a pipeline program that pre-processes, sends to the DarkShield-Files API, then post processes. The preprocessing helped DarkShield correctly find (match, and mask) the date of birth.

Original image with uneven lighting.

Original image with uneven lighting.

Result of sending original image to DarkShield API without any preprocessing.

Result of sending original image to DarkShield API without any preprocessing.

Without preprocessing, many of the entries specified as sensitive are found and redacted. However, there are several entries that should have matched that did not due to inaccuracies in text detection by the OCR engine. These include Davi in the Surname, Longwood University, Farmville, VA, and Marta near the bottom of the image.

However, when the image is preprocessed with adaptive thresholding binarization (and using the same search matchers and Tesseract OCR model), these entries are now matched correctly and redacted. Unlike other preprocessing methods, adaptive thresholding binarization cannot be reverted with post processing.

Any of these image preprocessing methods can be modified or expanded upon to meet the specific needs of different images. Due to the diverse content that can be in an image, there is not a single preprocessing pipeline that can be the most effective for every possible image.

DarkShield allows for versatility in how image preprocessing can be applied prior to sending images to its API. Any programming language capable of interacting with the DarkShield API through HTTP requests can be used.

In a future DarkShield API release, it is possible that additional image preprocessing methods will be baked into the API itself, eliminating the need to incorporate them into ‘glue code’. For example, image rotation and deskewing is already automatically handled by the DarkShield API.

Alternative Solutions for OCR Inaccuracy

Also consider that even in ideal conditions, OCR is not guaranteed to be 100 percent accurate. Because of this, it may be worth erring on the side of caution when setting up search matchers.

Fuzzy set search matchers can be set up that allow for entries to be still matched even if OCR misses, adds, or misinterprets a specified number of letters. Regular expression patterns can be modified to adjust for potential errors in the text identified by OCR.

On the very cautious side, the (.*) pattern can be specified to match all text identified by OCR, but that may redact parts of the image that are not desired to be redacted. Generally, most OCR failure in accuracy is in detecting what the letters of the text are, not where the text is.

Other Solutions Being Considered for Development

Finally, additional search matching methods such as fuzzy regular expression matching and set elimination are being considered as additional search matching options. These are specifically designed to address the issue of some letters of words being incorrectly identified by OCR.

In fuzzy regular expression matching, a threshold percentage can be specified. In typical regular expressions, the result is binary: there is either a match or not a match. With fuzzy regular expressions, if an 80% threshold is specified, and a six-letter word is off by one letter from matching the regular expression, the word will still be matched (as 83% is > the 80% threshold).

Set elimination is the inverse of the existing set matcher search method. In set elimination, a text file containing a list of entries separated by newlines can be given, and text that does not match any of these words will be matched.

For example, a set containing all English (or other language) words found in a dictionary can be given. In this case, all entities, SSN, or other numbers will be matched, but any words that would be found in a dictionary of the language will not be matched. This search method errs on the side of caution, making it a good fit for the imperfections of OCR.

If you have any questions or feedback around this article, please contact darkshield@iri.com.