Incremental Data Masking and Mapping: Detecting Changes and Updating…

Incremental data replication, masking, integration (ETL), and other data refresh operations are common in frequently updating database environments. These jobs require the detection of additions and updates to tables. Such dynamic operations are easy to automate in IRI Voracity workflows designed and run in IRI Workbench (WB).

This article contains a workflow example that Voracity, FieldShield, CoSort, or NextForm DBMS edition users can implement to regularly check for changes in an source table (Oracle in this case) to decide when to move data into a new target (MySQL). It also shows how data can be masked conditionally as part of this process. Note that IRI also has a log-based approach compatible with several RDBs called Ripcurrent, to increment data in real-time without the need for delta column values; see this article for more information.

Changes can be loaded into different databases or files using a task-scheduled batch file or shell script. This can be done using a timestamp and specific fields in the source table. Error checking is also included and can also be acted upon.

This example was created and run on a Windows machine. It can be easily modified to work on a Linux or Unix platform.

Creating the batch file is easy using a Voracity Flow diagram in WB. In this example, the source table contains columns named CREATED_DATE and UPDATED_DATE that are important in this job.

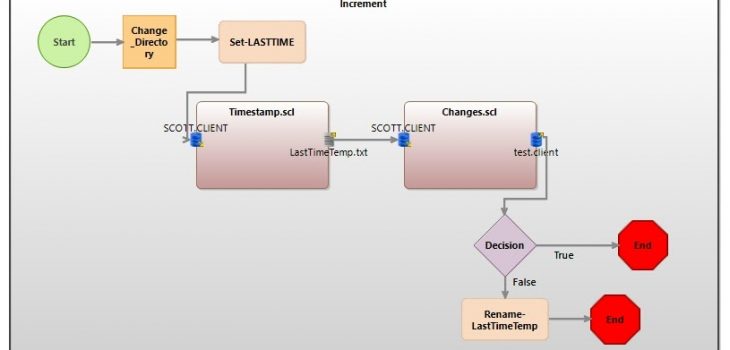

The image below shows the steps that are contained in the batch file. To summarize:

- the job is run in a specific directory

- an environment variable is set using the timestamp of the last job run

- the current timestamp is recorded

- current changes are captured

- the error level is checked and acted upon if successful or not

- the current timestamp overwrites the last run timestamp

- a rule to redact emails ending in “edu” is used in the target

- a rule to partially redact the SSN field is used in the target

- the changed data is loaded into MySQL

- the error level is checked, the temp timestamp file is renamed

Each task block in the workflow is explained below. The two mauve blocks are transform mapping blocks and represent CoSort SortCL job scripts. The mapping diagrams and job scripts represented by each of the blocks are shown below for clarity. For the how-to on building Voracity workflows from the palette, see this article.

Change Directory

This block changes the current working directory to the one specified.

Set LASTTIME

This command line block sets an environment variable called LASTTIME. The value set to the variable is the contents of the file LastTime.txt. The timestamp in this file is the timestamp that was recorded during the last run of this job. If this is the first run, this file will have to be made manually with an arbitrary timestamp dated before this job is run.

This file contains one line: “2008-09-10 09:39:23.5”

Timestamp.scl

This task uses the SortCL program to query the source database for the current time. That timestamp is saved to a file called LastTimeTemp.txt. The reason it is stored in a temp file is so that both the current and last time stamps can be preserved until error checking occurs.

It is important that the timestamp comes from the database, and not the local machine. This avoids problems where the database and the execution environment are not synchronized.

The mapping diagram and serialized script for this job are as follows:

Changes.scl

This job does the main extract, transformation, load. Input is the source table in Oracle and output is a similarly-formatted table in MySQL:

In the input section, a query is submitted to the source table for all records that have a CREATED_DATE or UPDATED_DATE greater than the environment variable LASTTIME. The query is “SELECT * FROM SCOTT.CLIENT WHERE CREATED > TO_TIMESTAMP(\’$LASTTIME\’, \’YYYY-MM-DD HH24:MI:SS.FF1\’) OR (UPDATED > TO_TIMESTAMP(\’$LASTTIME\’, \’YYYY-MM-DD HH24:MI:SS.FF1\’))”.

Additionally, a condition is added to check the EMAIL column for data ending in “edu”. This will be used in a conditional data masking function in output. In the output, an If-Then-Else statement is added to the EMAIL column. It uses the previously created condition to test the data. If the data ends in “edu”, then the email address is redacted. If not, the email address is copied from input.

A second redaction function is used in the SSN column. It redacts the first three characters, leaves the dash, redacts the next two characters, leaves the dash, and leaves the last four characters. For example ***-**-6789.

Below is the serialized SortCL job script described above so you can examine the query and conditional syntax involved in the incremental deltas:

Error CoSort

The decision block checks the variable ERRORLEVEL to make sure it returned 0 (for success) after running the SortCL job above. If it did not, the job continues to the END block where the job is terminated. If it returns true, the job continues to the next block.

Rename LastTimeTemp

This command block copies the contents of LastTimeTemp.txt to LastTime.txt. This records the previously captured current timestamp in the file to be used for the next job run.

Batch File

The batch file and transformation scripts are created when the Flow diagram (shown above) is exported. A copy of the batch file is below. Each block adds executable lines to the batch file.

Task Scheduler

Using the Windows Task Scheduler, this batch file can be executed repeatedly to capture the changes in the source database.

Conclusion

With a little planning, and the use of command blocks, changes to a database table can be detected automatically using a batch file, and then scheduled to run at selected intervals so that you can move, map, mask and otherwise manipulate changed data on an incremental basis.

Contact voracity@iri.com or your IRI representative for more information or help with your use case.