Production Analytic Platform #3/4: Processing Real World Data

This is part 3 of a 4-part series on Production Analytics.

Processing on Par with Information [Part 1]

Data Processing Drives Efficiency [Part 2]

Unifying the Worlds of Information and Processing [Part 4]

The inclusion of full-function data processing in the Production Analytic Platform simplifies the task of gathering data from external sources, such as the Internet of Things and clickstreams. Such data requires both intensive exploratory modeling and the high-speed application and maintenance of the resulting models on real-time and streaming data. A podcast and a video supporting these concepts can be found here.

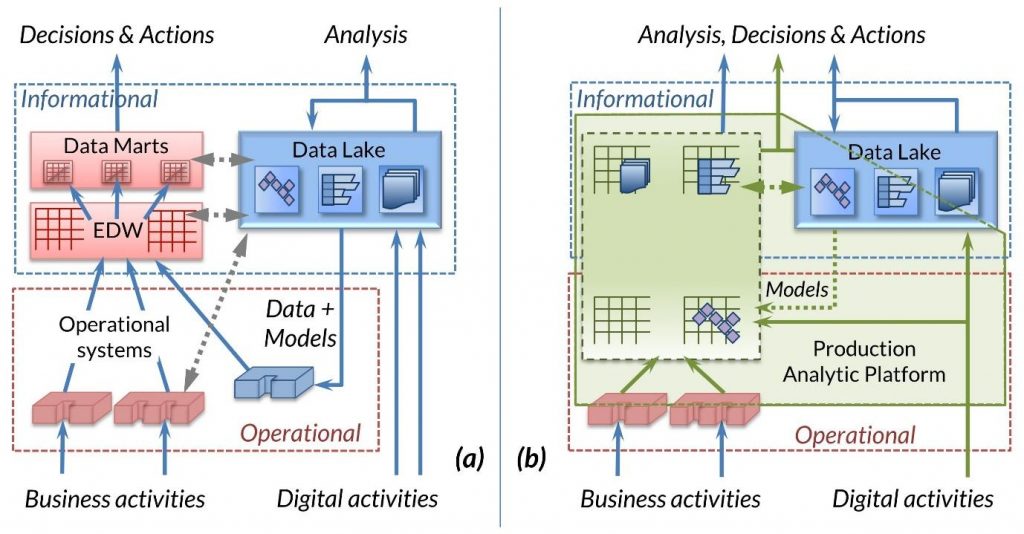

In the first two articles of this series, I focused on the traditional world of the data warehouse and the operational systems feeding it, shown on the left-hand side of Figure 1a below. The conclusion was that using a powerful data processing tool, such as IRI Voracity, enables the de-layering of the warehouse and simplifies the preparation of data from the operational environment. The result, the Production Analytic Platform, is shown on the left of Figure 1b.

With the advent of “big data”, this traditional world has become a much smaller part, at least in terms of data volumes, of the data processing environment of every business. Despite claims to the contrary, it remains an essential part of the IT systems because it contains the legally and financially binding record of the business. Its efficient and reliable functioning remains a key measure of success for IT. As shown in the previous blog post, a comprehensive and powerful data processing tool can contribute to this goal.

But what of the new world of digital activities harvested from external sources? Can a focus on processing rather than storage help here? The short answer is “yes”, but first we must review how the processing of digital activities works today and how it differs from traditional data processing.

Capturing Digital Activities

The right-hand side of Figure 1a represents the current approach to all forms of analytics as applied to data generated by the digital activities of people and things in the real world. Events and measures generated by sensors in the Internet of Things (IoT) stream into the data lake in enormous volumes at high speed. Messages shared on social media, as well as records of human activities on the web, also flow into the data lake. The two arrows labeled “Digital activities” are deceptively simple representations of the complex data capture, streaming and processing steps involved, particularly in the case of IoT data. Once gathered, this vast reservoir of data is subjected to analytics of many types to discover and define statistical models that identify potentially valuable trends or patterns in the data.

While such analysis may often be worthwhile in its own right, it is common to apply the resulting models continually, in (near) real time, to the ongoing business. For example, a year’s worth of sensor data from a fleet of trucks is analyzed as above to correlate certain patterns of temperature, oil pressure and vibration with various component failures. Creating such models is exploratory, informational work performed by skilled data scientists.

The value of these models is only realized when they are applied to real-time data streaming in from trucks on the road, allowing predictions to be made about when a component is likely to fail and enabling preventative maintenance to be planned and undertaken. This second phase of analytics is highly operational in nature. Planning maintenance requires information about the schedule of the truck and the value of its jobs, the location of spare parts, and the availability of skilled mechanics, among other factors.
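As a rough illustration of this second, operational phase, the sketch below applies an already-discovered model to a stream of truck readings. It is not taken from any Voracity job; the `Reading` fields, the logistic form of the model, and every weight and threshold are hypothetical values chosen only to make the example run.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Reading:
    """One sensor reading from a truck (hypothetical field names and units)."""
    truck_id: str
    temperature_c: float
    oil_pressure_kpa: float
    vibration_mm_s: float


# Hypothetical parameters standing in for a model that data scientists
# would have fitted to historical data in the exploratory phase.
WEIGHTS = {"temperature_c": 0.02, "oil_pressure_kpa": -0.01, "vibration_mm_s": 0.3}
BIAS = -2.0
ALERT_THRESHOLD = 0.5  # assumed cut-off for raising a maintenance alert


def failure_risk(reading: Reading) -> float:
    """Score one reading with a simple logistic model; returns a value in (0, 1)."""
    z = BIAS + sum(weight * getattr(reading, name) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def alerts(stream: Iterable[Reading]) -> Iterator[Tuple[str, float]]:
    """Yield (truck_id, risk) for each reading whose risk reaches the threshold."""
    for reading in stream:
        risk = failure_risk(reading)
        if risk >= ALERT_THRESHOLD:
            yield reading.truck_id, risk


if __name__ == "__main__":
    incoming = [
        Reading("T1", 90.0, 300.0, 2.0),    # normal operating values
        Reading("T2", 130.0, 150.0, 12.0),  # hot, low oil pressure, heavy vibration
    ]
    for truck_id, risk in alerts(incoming):
        print(f"schedule maintenance check for {truck_id} (risk {risk:.2f})")
```

In practice, each alert would then have to be joined with operational data such as the truck's schedule, spare-part locations and mechanic availability before maintenance could be planned.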

This work is represented in Figure 1a by the transfer of data and models into the operational environment and subsequent reporting via the data warehouse. Today’s actual implementations vary widely. In some cases, data and models are exported to another system. Sometimes, operational data is copied into the data lake, so the real-time analytics can run there. Combinations of these approaches also exist. All, however, involve complex arrangements of copies of often voluminous data.

Re-envisioning Operational Analytics

The Production Analytic Platform, shown in Figure 1b, offers a conceptually simpler architecture. Observe that the feed from the external world is now represented by a single bifurcated arrow: data is fed through a single data processing component to both the data lake and the optimized Production Analytic Platform storage. What is less obvious is that with such an approach, data can be transported and processed all the way from the sensor systems within a single environment, such as IRI Voracity, which can run on all platforms from the edge processors to the central systems.
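The bifurcated arrow can be pictured as one processing pass with two outputs. The following minimal sketch assumes hypothetical sink callables (`to_lake`, `to_operational`) and a hypothetical selection rule; it only illustrates the idea of a single component feeding both stores and says nothing about how Voracity implements it.

```python
from typing import Callable, Dict, Iterable

Record = Dict[str, object]


def process_feed(
    records: Iterable[Record],
    to_lake: Callable[[Record], None],
    to_operational: Callable[[Record], None],
    is_operational: Callable[[Record], bool],
) -> None:
    """Clean each record once, then route it to one or both destinations."""
    for record in records:
        # Shared preprocessing step: normalize field names a single time.
        cleaned = {key.strip().lower(): value for key, value in record.items()}
        # Everything goes to the data lake for model discovery.
        to_lake(cleaned)
        # Only the subset needed for operational analytics goes to the
        # optimized platform storage.
        if is_operational(cleaned):
            to_operational(cleaned)


if __name__ == "__main__":
    lake, operational = [], []
    feed = [
        {" Truck_ID ": "T1", "Type": "telemetry"},
        {"truck_id": "T2", "type": "social"},
    ]
    process_feed(
        feed,
        to_lake=lake.append,
        to_operational=operational.append,
        is_operational=lambda rec: rec.get("type") == "telemetry",
    )
    print(len(lake), "records to lake;", len(operational), "to operational storage")
```

Because the cleaning step runs once for both destinations, the two stores stay consistent without a second copy-and-transform job.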

With Voracity, this entire process can be defined and managed from a single Eclipse-based Workbench where all required metadata and process steps can be designed and maintained graphically or through a straightforward 4GL editor. A single, integrated set of jobs runs in the Production Analytic Platform to:

- Capture data streamed from sensor systems and other real-time sources (e.g., pipes, Kafka, web services) in message-oriented formats

- Perform initial selection and basic analysis of data on an edge server to reduce the volumes transported and to perform local functions, such as edge analytics and immediate responses to urgent events

- Transport and preprocess all arriving data into a form suitable for model discovery analytic tools in the data lake

- Transport and preprocess the subset of data required for operational analytics in the optimized Production Analytic Platform storage

- Provide direct reporting to business users without the need for dedicated data marts, as described in the previous blog article

- Support model management (including stored metadata, data classes, ERDs, jobs, and rules), shown as the dotted line labeled “Models” in Figure 1b, via a metadata management hub
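The edge-processing step in the list above (initial selection to reduce volumes, plus immediate handling of urgent events) might look something like the sketch below. The temperature threshold and window size are hypothetical, and averaging is just one possible way of reducing the volume sent onward.

```python
from statistics import mean
from typing import List, Sequence, Tuple

URGENT_TEMP_C = 120.0  # hypothetical threshold for an urgent event


def reduce_at_edge(
    temperatures: Sequence[float], window: int = 3
) -> Tuple[List[float], List[float]]:
    """Return (urgent readings, per-window averages) for a batch of readings.

    Urgent readings are kept individually so they can be acted on locally;
    all readings are condensed into one average per window for transport.
    """
    urgent = [t for t in temperatures if t >= URGENT_TEMP_C]
    summaries = [
        mean(temperatures[start:start + window])
        for start in range(0, len(temperatures), window)
    ]
    return urgent, summaries


if __name__ == "__main__":
    batch = [80.0, 82.0, 84.0, 125.0, 90.0, 85.0]
    urgent, summaries = reduce_at_edge(batch)
    print("urgent:", urgent)
    print("forwarded summaries:", summaries)
```

Here six raw readings become two forwarded values, while the single over-threshold reading remains available for an immediate local response.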

As in the case of de-layering the data warehouse, the conclusion here is that, by focusing on data processing, we can simplify the current environment and garner new benefits in data management and production efficiency.

Next Up… Unifying the Worlds of Information and Processing

In the next and final post of the series, we explore virtualized access to the Production Analytic Platform and wrap up with a summary of the benefits and possibilities of this approach.