Migrating RDB Data via the Data Vault Generator

IRI Workbench includes a Data Vault Generator wizard to assist users of the IRI Voracity platform in migrating a relational database model to a Data Vault (DV) architecture. The wizard has three output options depending on the needs of the user.

- The first option (also included with the next two options below) creates the Entity Relationship Diagram (ERD) for Data Vault Modeling. This option also geneartes the Data Definition Language (DDL) script Data Vault 2.0 to facilitate your relational database migration (e.g., star schema) to the Data Vault model .

- The second option, and the focus of the article, will create a DDL for the Data Vault tables that do not exist. It also creates the job scripts to load the data from your source tables into the new Data Vault 2.0 target tables, and it generates the ERD for the target tables.

- The third option will create a DDL for the Data Vault tables that do not exist and load the new tables with randomly generated test data (and show the new ERD). Information on that process is covered in this article.

According to Dan Lindstedt, the inventor of Data Vault, “The Data Vault is a detail oriented, historical tracking and uniquely linked set of normalized tables that support one or more functional areas of business. It is a hybrid approach encompassing the best of breed between 3rd normal form (3NF) and star schema…. The Data Vault is a data integration architecture; a series of standards, and definitional elements or methods by way information is connected within an RDBMS data store in order to make sense of it.”

There are three types of tables in the DV standards. A Hub contains the unique business keys. A Link defines the relationships between the business keys. A Satellite contains the context (attributes) of the table, and can be a child of either a hub or link table. In each of these tables, a hash of the key’s raw data is used as the primary key of the new table.

Each of these tables also includes the source of the original data and a load timestamp for historical tracking. A Satellite also contains an end timestamp and an optional hash difference for tracking changes to the records.

The Data Vault Generator wizard uses the existing primary (PK) and foreign (FK) keys as a starting point for organizing the new tables. The defaults are as follows:

- A hub for each PK key (including compound keys).

- A link for each self-referencing FK.

- A link for each group of FKs (minus the self-referencing keys).

- A satellite on the hub if the table contains zero FKs.

- A satellite on the link if at least one FK exists.

In this example, seven related tables will be used representing human resource data. Below is the ERD for the source tables.

Setup

The wizard starts with the setup page where the details of the job are defined. As stated earlier, the output for this example is Load source data. There are two hashing type options: MD5 and SHA-256. Snowflake is selected as the loader. Leaving the loader blank will create delimited file outputs.

Check the Include last seen date to include this type of column in the hubs and links. This is needed if the source system does not provide auditing or CDC.

Check the Include hash diff to include this type of column in the satellites. The hash difference column records a hash of the data. This is recorded so that when any changes are made to the data and a new record is loaded, there is a way to determine that the new record is indeed different.

Check the Show summary page to display the details of the job at the end of the wizard. Because the wizard examines the table dependencies, the display of this page may take a while to load if there are a large number of related tables.

Table Selection

This page is where the source tables are selected. In this example, the seven source tables are in an Oracle database.

Hub Options

The hub options page opens with a list of the existing primary keys, the proposed new table name, and the business key columns populated by the existing primary key. The table name and columns can be edited. This is helpful when the business key is not the primary key.

For instance, the REGION_NAME is a more descriptive business key than REGION_ID which is what the PK uses. Additionally, the order of the columns can be modified. This is important during the hashing phase.

Link Options

This page is populated from the existing foreign keys; however, is updated with the business keys selected on the previous page. Each entry can be edited.

In the case of a self-referencing key, the type of link can be selected. While the structure remains the same, the table name changes depending on the type.

Satellite Options

As stated previously, the satellites connect to either a hub or link. This page loads with the Data Vault standard that the satellite is attached to a hub if there are no foreign keys, and attached to a link if there is at least one foreign key.

Loading

On this page, the details for the load are selected. This page is not shown if there is no loader selected on the setup page. It is possible to choose the same or a different location for the target tables.

If the tables already exist in a different target, the output mode can be either Create for truncate first or Append. In this example, the tables do not exist. There is also an option to create a drop script which is helpful during testing.

Summary

If activated, the summary page lists the details for the job.

Output

When the wizard finishes, it produces multiple files to run the job and opens the ERD. What was seven source tables, turns into twenty target tables – seven hubs, six links, and seven satellites.

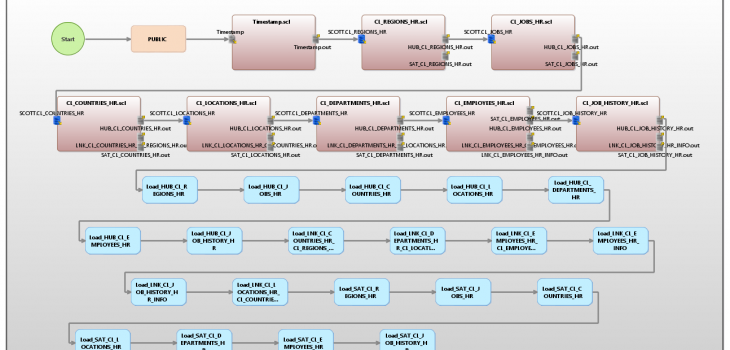

The flow diagram shown below represents all the pieces that comprise the migration job. They include an SQL file to create the new tables, a script to record the timestamp of the job, seven SortCL data transformation scripts to retrieve and reformat the data, and twenty loader files.

The CL_EMPLOYEES_HR script shown below reveals the extract-transform-load statements that load the data from the original CL_EMPLOYEES_HR table into data files for the four new DV tables. In the Inrec section, the concatenations and hashes needed for DV are created.

Because these calculations happen in the CoSort-powered SortCL engine and not the database, there is no need for a separate staging step. This job sorts the data on the business key of the table while discarding duplicates.

In the output sections, flat files for the loader are created for the new tables using the appropriate fields for each of those tables.

The Data Vault Generator in IRI Workbench makes the process of prototyping a Data Vault project more automated. After loading the table defaults, edits can be made to choose the business keys and their order.

This job also creates the new resources, creates the timestamp, concatenates and hashes the key fields, and loads the new resources. For more information, please contact voracity@iri.com.