Test Data Management: Test Data Sharing & Persistence (Step…

This article is the last of a 4-step series introduced here.

Step 4: Test Data Sharing & Persistence

Being able to modify, deploy, store, and re-use test data is important. It may not have been that easy to create in the first place (Steps 1-3). Why go through more rounds of specification and/or generation than you have to? If more people can make use of the test data, why shouldn’t they?

Recommendations:

- Have a repeatable process for creating the test data again. The data may have been lost, deleted, or obsolesced. If you are relying on production data for access and masking in the first place, consider the risk of those things ahead of time, and consider the ‘generation from scratch’ alternative.

- Make sure your test data build processes can be easily automated and modified. Projects created in a complex programming environment (especially a legacy one unfamiliar to you) can make test-data job management difficult. For example, it may not be easy to schedule, repeat, change, or secure (compliance-check) the creation flow parameters if you are not familiar with the automation facility. Better to have clear job flow components based on command-line calls, which you can manipulate and plug into batch flows or preferred automation tools.

- Favor accessible, explicit generation programs and metadata definitions for the target layouts. Be able to read and understand what the generation process is doing, both in terms of structures and procedures. Many times the attributes of test data values are only accessible from GUIs or, worse, modular wizards only. That GUI could be inconvenient to keep re-running, or it may be unfamiliar or inaccessible. Ideally, you will also have serialized 4GL text scripts, defining the generation process which you could modify and re-run with a permanently licensed executable (hint: RCL).

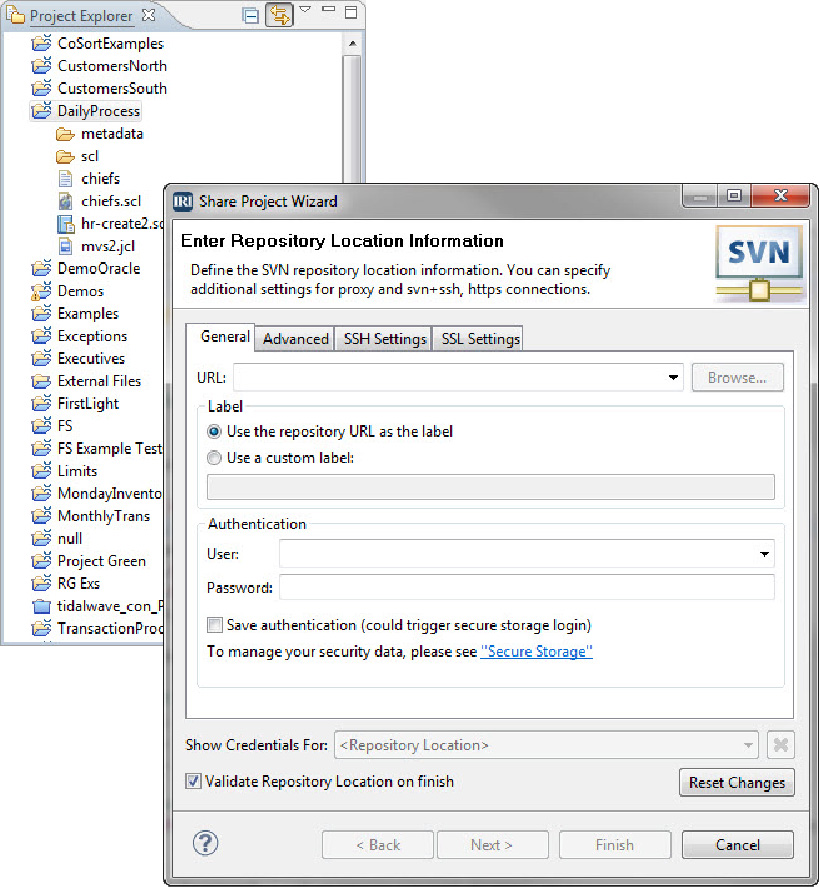

- Manage the scripts and test data in the same IDE. This facilitates iteration between specification and generation, and allows you to share the job scripts, build and population flows, and actual test data in centralized repositories. In the case of IRI RowGen, all of these assets can be managed, modified, re-used, and version controlled in Eclipse. The IRI Workbench GUI exposes free plug-ins like Subversion (below) and EGit (here), which you can use to mange RowGen scripts and other resources.

- Make the test data accessible from more places. If the data is compliant, and it’s convenient to do so, why not specify outputs beyond its primary target (e.g. table/s), if there can be other uses for it? In RowGen, for example, you can define test file targets in multiple formats (and each with different properties) while you are also creating ODBC, ODA, or bulk load feeds. This creates opportunities for federated test data, and distributed applications needing test data within the same process and I/O pass for the original beneficiary. This is not a trivial savings when m/billions of rows per target are being generated.

- Leverage the 7 data provisioning methods in the prior article to visualize and virtualize IRI test data in multiple platforms. In addition to the standard targeting options (multiple database, file format, message queues, and cloud silos) supported, many third-party ETL, analytic, CI/CD, replication, and test data management platform technologies can launch IRI test test data creation (masking, subsetting, and synthesis) jobs, and consume their results directly, thanks to API-level and other published methods of integration

- Consider the nexus between test data generation and production data processing. How useful would it be for metadata defining the test data formats to exist when real data in the same format(s) materialize? This would let you re-use the layouts in future applications. Test data layouts specified in RowGen .RCL or .DDF scripts are plug-compatible into: IRI CoSort SortCL jobs for data transformation and reporting on real data; IRI Voracity ETL jobs (where mappings can be previewed with test data); IRI NextForm for data and database migration; and, IRI FieldShield for masking sensitive data in RDBs and flat files. The same data definitions are also supported in Quest (erwin / AnalytixDS) Mapping Manager, and the Meta Integration Model Bridge (MIMB), so that third-party BI, CRM, ETL and modeling tools can use them.

Click here for the previous step.