? The Case for Safe, Intelligent Database Test Data

Database Test Data Usage – This blog caught my eye because of its title, Do the right thing when testing with production data. It struck me as oxymoronic, since we know production data should not be used for testing at all …

Of course we know how tempting it is to use production data for testing applications, simulating databases, prototyping ETL operations, and just about anything else that needs to work with the real thing. However, it is not worth the legal risk of exposing that data to breaches. And, even if you were certain that you could lock that data down somehow, have you considered its potential inadequacy for testing purposes? If you only develop and test with data that’s real today, what happens when your platform has to work with different data tomorrow? Ideally, you need to be able to stress-test new platforms, and that means taking into account future data changes in values, formats, ranges, and/or volumes.

Security Active’s article did, however, acknowledge the data breach risk, and suggested that the right thing to do was to obfuscate the production data with appropriate protection tools and scripts — like IRI FieldShield — which would encrypt, mask, pseudonymize or otherwise de-identify the production data to make it safe for testing.

And that is certainly a popular, though I submit not necessarily the best, approach. What happens if a security method is compromised (e.g. the decryption key is learned or the permutation algorithm reversed)? Or what if the test data is safe, but ends up looking unrealistic or being referentially incorrect? And how would morphing 100,000 social security numbers in one fact table, for example, help you test an enterprise data warehouse that will need to handle 50,000,000 numbers distributed and linked across multiple tables?

Better if you could generate file, report, or database test data without using production data at all, but where that test data has:

- realistic (but still not real) data with the right data types, layouts, and values

- referential integrity in that it preserves primary-foreign key associations

- the ability to scale to any volume and satisfy any data range requirement

- automatic, pre-sorted loads to multiple table or file targets simultaneously

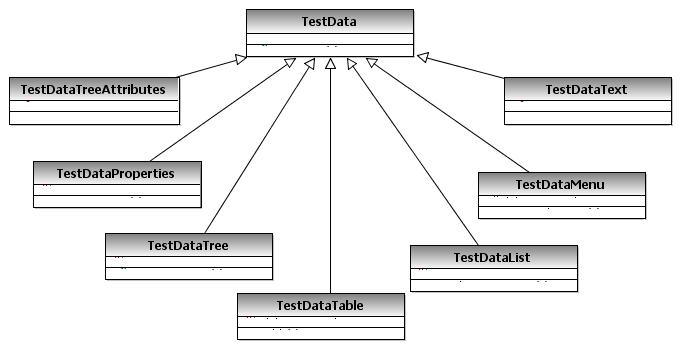

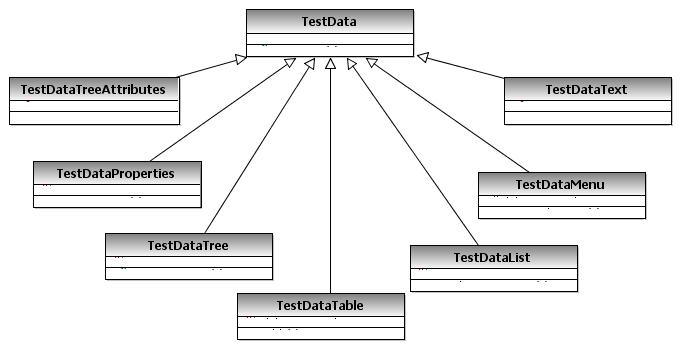

Within the IRI Workbench GUI, built on Eclipse™, IRI RowGen combines data model parsing, script generation, and target table loading of big, safe, intelligent test data that is also structurally and referentially correct. This means that you can create test data that conforms to metadata specifications, privacy regulations, business rules, and stress-testing requirements.

Watch this space for updates on RowGen, and in the meantime,

Do not use production data for testing.

Do not use production data for testing.

Do not use production data for testing.

There, I said it three times.

Click to see an overview of IRI RowGen: