An Introduction to Data Mining

Note: This article was originally drafted in 2015, but was updated in 2019 to reflect new integration between IRI Voracity and Knime (for Konstanz Information Miner), now the most powerful open source data mining platform available.

Data mining is the science of deriving knowledge from data, typically large data sets in which meaningful information, trends, and other useful insights need to be discovered. Data mining uses machine learning and statistical methods to extract useful “nuggets” of information from what would otherwise be a very intimidating data set.

Data mining spans multiple computer and mathematical disciplines. It is not so much a unitary process as it is an umbrella term for a set of actions. Four broad tasks that are performed while mining include: exploratory data analysis (EDA), descriptive modeling, predictive modeling, and pattern discovery.

EDA uses conventional statistical visualization methods or unconventional graphical methods to see if anything interesting can be found in the data.
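As a rough illustration of the statistical side of EDA, the sketch below summarizes a small, made-up series and bins it into a crude text histogram. The `orders` data and both function names are hypothetical and exist only for this example; real EDA would normally rely on a plotting or statistics package.

```python
import statistics

def summarize(values):
    """Return a few basic summary statistics for a list of numbers."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return {
        "count": len(values),
        "min": min(values),
        "q1": q1,
        "median": statistics.median(values),
        "q3": q3,
        "max": max(values),
        "mean": statistics.fmean(values),
    }

def histogram_counts(values, bins=5):
    """Count how many values fall into each of `bins` equal-width intervals."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    counts = [0] * bins
    for v in values:
        index = min(int((v - lo) / span * bins), bins - 1)
        counts[index] += 1
    return counts

# Hypothetical sample: daily order counts with one suspicious spike
orders = [12, 15, 14, 13, 16, 15, 14, 90, 13, 15]

print(summarize(orders))
for i, count in enumerate(histogram_counts(orders)):
    print(f"bin {i}: {'#' * count} ({count})")
```

The gap between the mean and the median, and the lone value in the last bin, are the kind of "anything interesting" that EDA is meant to surface before any modeling starts.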

In descriptive modeling, the data is passed to a routine that yields verbs (data generators) or adjectives (data descriptions) that explain how the data was formed. This includes methods that associate the data with a probability distribution, as well as clustering and dependency modeling.
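Clustering is probably the easiest of these descriptive methods to show in a few lines. The following is a minimal sketch of k-means (Lloyd's algorithm) in plain Python, assuming 2-D points and a deterministic start from the first k points. The sample points and function names are invented for illustration and are not taken from any particular mining product.

```python
import math

def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
        for p in points
    ]

def kmeans(points, k, max_iterations=100):
    """Basic k-means: alternate assignment and centroid update until stable."""
    centroids = list(points[:k])  # assumption: first k points seed the clusters
    for _ in range(max_iterations):
        labels = assign(points, centroids)
        updated = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                # New centroid is the per-axis mean of the cluster members
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(centroids[c])  # keep an empty cluster's centroid
        if updated == centroids:
            break
        centroids = updated
    return centroids, assign(points, centroids)

# Hypothetical data: two visually obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
centroids, labels = kmeans(points, k=2)
print(centroids)
print(labels)
```

The returned centroids act as the "adjectives" for each group: a compact description of the data that fell into it.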

Predictive modeling uses regression and classification methods to set up a standard for predicting future unknown data points. Regression is a purely mathematical analysis that fits an equation to a data set in order to predict the next value. Predictive modeling can also rely on pattern rules and relationship (or even specifically identified cause and effect) trends that were discovered using the Logical Analysis of Data (LAD) method.
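To make the regression idea concrete, here is a small sketch of ordinary least squares for a single predictor, written without external libraries. The monthly figures are made up, and `fit_line` is a hypothetical helper name rather than part of any package mentioned in this article.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: sales (in thousands) for months 1 through 5
months = [1, 2, 3, 4, 5]
sales = [10.0, 12.1, 13.9, 16.2, 17.8]

slope, intercept = fit_line(months, sales)
next_month = slope * 6 + intercept  # predict the next, unseen value
print(f"slope={slope:.2f} intercept={intercept:.2f} month 6 forecast={next_month:.2f}")
```

The fitted equation is the "standard" the paragraph above refers to: once the slope and intercept are known, any future month can be plugged in.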

Pattern discovery via LAD classifies new observations according to past classifications of similar observations, and uses optimization, combinatorics, and Boolean functions to improve analysis accuracy.

For the most part, these methods can only indicate which data entries are related, but not the reasons why or how they are related. It is possible, however, to explain what distinguishes one class or cluster from another by finding these rules or patterns, which are expressed in various ways depending on the data itself.

Applications for data mining can range from business marketing to medicine, from fraud detection in banking and insurance to astronomy, from human resources management to the catalog marketing industry, and so on. The medical profession has found it useful for distinguishing between attributes of people with different disease progression rates. Retail stores are now using data mining to better understand consumer spending habits, noting which items are bought together and their relation, as well as the best way to advertise to their customers. And much of the corporate world now relies on data mining to calculate, execute and justify major business decisions.
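The retail case of "which items are bought together" can be sketched as a simple pair-counting exercise. The example below computes support and confidence for item pairs over a handful of invented baskets. It is a simplified stand-in for full association-rule mining, and the basket contents, the threshold, and the `pair_rules` name are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations

def pair_rules(baskets, min_support=0.3):
    """Return (antecedent, consequent, support, confidence) for frequent pairs."""
    n = len(baskets)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in baskets:
        items = sorted(set(basket))  # ignore duplicates within one basket
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support >= min_support:
            # Confidence: of the baskets with the antecedent, how many have both
            rules.append((a, b, support, count / item_counts[a]))
            rules.append((b, a, support, count / item_counts[b]))
    # Strongest rules first
    return sorted(rules, key=lambda r: (-r[3], -r[2], r[0], r[1]))

# Hypothetical purchase data
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
    ["bread", "butter", "eggs"],
    ["milk", "bread"],
]

for antecedent, consequent, support, confidence in pair_rules(baskets):
    print(f"{antecedent} -> {consequent}: support={support:.2f} confidence={confidence:.2f}")
```

In this toy data every basket with butter also contains bread, which is the sort of relationship a retailer might use for shelf placement or advertising.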

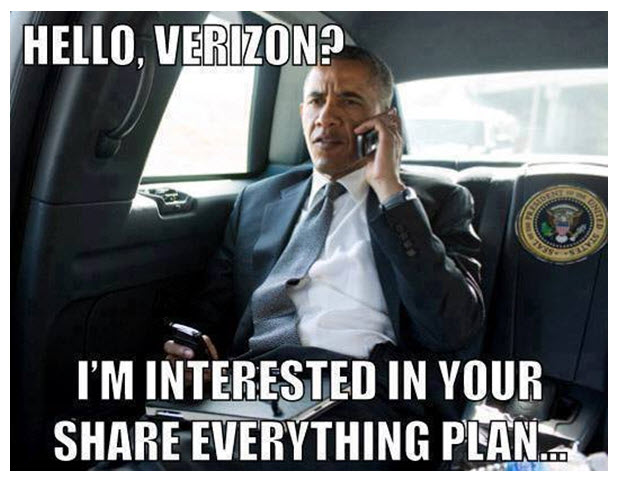

However, as everyone now knows from recent intense media coverage of the NSA-Verizon telephone records scandal, data mining can also be extremely controversial. Just in case you’ve been living under a rock, here is a brief synopsis:

On June 5th, 2013, the British daily newspaper The Guardian published an exclusive report that millions of customer records from Verizon, one of the largest telecommunications providers in the U.S., were collected by the U.S. National Security Agency, in response to a classified order from the U.S. Foreign Intelligence Surveillance Court. Verizon’s Business Network Services was forced to hand over all telephony metadata created by the mobile service provider within the U.S. and abroad. As a result, bipartisan and widespread criticism of the Obama administration erupted from civil rights advocacy groups and news media outlets, claiming presidential abuse of executive power. No resolution of this incident is in sight as of the writing of this article. But it will, undoubtedly, remain a prime example of how data mining can sometimes be viewed in a negative light, especially with regard to privacy concerns and the general public.

When dealing with large volumes of static or dynamic data, there will most certainly be computational and I/O-related performance issues. With databases containing terabytes and exabytes of data, combing through the data can take a lot of time, and the mining algorithms need to run very efficiently. Some other difficulties include overfitting and noisy data.

Overfitting usually means there is not enough good data available. The data model (in this case, the global description of the data) becomes too complex because it has too many parameters relative to the number of observations. This exaggerates minor fluctuations in the data, thus compromising the model’s reliability as a basis for making predictions.
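A small experiment can show the effect. In the sketch below, six made-up observations follow y = 2x + 1 plus a fixed wobble. A two-parameter line is compared with a six-parameter polynomial that passes through every observation exactly (evaluated by Lagrange interpolation). The data, the wobble values, and the helper names are assumptions chosen purely for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (two parameters)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def interpolate(xs, ys, x):
    """Evaluate the polynomial through all points (one parameter per point)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += yi * weight
    return total

def mse(predict, xs, ys):
    """Mean squared error of a prediction function over a data set."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical observations: true trend 2x + 1 plus small fluctuations
train_x = [0, 1, 2, 3, 4, 5]
wobble = [0.5, -0.4, 0.6, -0.5, 0.4, -0.6]
train_y = [2 * x + 1 + w for x, w in zip(train_x, wobble)]

# Held-out points that lie on the true trend
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [2 * x + 1 for x in test_x]

slope, intercept = fit_line(train_x, train_y)
line = lambda x: slope * x + intercept
poly = lambda x: interpolate(train_x, train_y, x)

for name, model in (("line", line), ("polynomial", poly)):
    print(name, "train MSE:", mse(model, train_x, train_y),
          "test MSE:", mse(model, test_x, test_y))
```

The polynomial reproduces the training observations almost perfectly but does worse than the line on the held-out points, because it has modeled the fluctuations rather than the trend.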

Noisy data, on the other hand, refers to too much of the wrong kind of data. Meaningless, erroneous, unstructured (unreadable) or otherwise corrupt data increases storage requirements and/or requires statistical analysis to be weeded out before it can hamper data mining accuracy. Good data mining algorithms take noisy data into account.
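One common way to weed out noise before mining is to drop unreadable values and then flag outliers statistically. The sketch below uses the modified z-score based on the median absolute deviation (MAD), with the conventional 3.5 cutoff. The readings and the `clean` function are hypothetical, and this is only one of many possible cleansing strategies.

```python
import math
import statistics

def clean(readings, threshold=3.5):
    """Drop unparseable values, then remove outliers by modified z-score."""
    numeric = []
    for raw in readings:
        try:
            value = float(raw)
        except (TypeError, ValueError):
            continue  # missing or unreadable entry
        if math.isfinite(value):
            numeric.append(value)

    median = statistics.median(numeric)
    mad = statistics.median([abs(v - median) for v in numeric])
    if mad == 0:
        return numeric  # no spread to judge outliers against

    # 0.6745 scales the MAD so the score is comparable to a standard z-score
    return [v for v in numeric if abs(0.6745 * (v - median) / mad) <= threshold]

# Hypothetical sensor readings with gaps, junk, and one corrupt spike
raw_readings = ["20.1", "19.8", None, "20.4", "n/a", "250.0", "20.0", "19.9", ""]
print(clean(raw_readings))
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value would otherwise distort the very statistics used to detect it.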

Data mining is a single step in a larger process known as knowledge discovery in databases (KDD). KDD begins with data preparation: selection, pre-processing, and transformation of the data, where you determine what you want to study and set it up in a way that can be mined. That means representing the data as an m × n matrix, with a numerical representation of each element of each data vector. Next, you mine. And finally, you get to use the old noggin to interpret and analyze that information. Then, if the hidden patterns and trends are still not clear enough, you must dig a little deeper.
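The matrix representation used in the preparation step can be sketched as follows: m records become m rows, and each field becomes one or more numeric columns, with categorical fields expanded by one-hot encoding. The field names and the `to_matrix` helper are hypothetical, and one-hot encoding is just one reasonable choice for turning categories into numbers.

```python
def to_matrix(records, numeric_fields, categorical_fields):
    """Encode a list of dict records as column names plus an m x n numeric matrix."""
    # Collect the distinct values seen for each categorical field
    categories = {
        field: sorted({record[field] for record in records})
        for field in categorical_fields
    }
    columns = list(numeric_fields)
    for field in categorical_fields:
        columns += [f"{field}={value}" for value in categories[field]]

    matrix = []
    for record in records:
        row = [float(record[field]) for field in numeric_fields]
        for field in categorical_fields:
            # One column per category: 1.0 where the record matches, else 0.0
            row += [1.0 if record[field] == value else 0.0
                    for value in categories[field]]
        matrix.append(row)
    return columns, matrix

# Hypothetical customer records
records = [
    {"age": 34, "income": 52000, "region": "east"},
    {"age": 51, "income": 87000, "region": "west"},
    {"age": 27, "income": 39000, "region": "east"},
]

columns, matrix = to_matrix(records, ["age", "income"], ["region"])
print(columns)
for row in matrix:
    print(row)
```

Here m is 3 (records) and n is 4 (two numeric columns plus two region indicators), and every row is a data vector that a mining algorithm can consume directly.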

IRI’s role in the data mining and KDD process is to ready and re-structure big data for analysis through multiple high-performance data transformation functions. Specifically, the IRI CoSort data manipulation package can rapidly filter, manipulate, and reformat data so that it can be processed by the algorithms in data mining software suites. CoSort is also the default data processing engine in the IRI Voracity data management platform, designed for a wide range of data profiling, preparation and wrangling work.

For those working with CoSort in the IRI Workbench GUI, BIRT is a free Eclipse plug-in with graphical reporting and business intelligence capabilities which include some analytics and mining features. Both CoSort and BIRT Analytics use the Eclipse IDE. With Open Data Access (ODA) data driver support going into CoSort, the data flow integration between the two plug-ins is also seamless and allows for more rapid what-if analyses.

For those working with Voracity in 2019 and beyond, we suggest installing the core provider for the free Knime Analytics Platform into IRI Workbench. In the same Eclipse pane of glass, the Voracity source (provider) node for Knime can hand off Voracity-prepared raw data in memory to Knime nodes for applications requiring statistical and predictive analysis, data mining, machine and deep learning, neural networks, and artificial intelligence.

Contributors to this article include Roby Poteau and David Friedland.